Operating Systems - Mechanisms & Policies

Recently, I’ve been reading Operating Systems: Three Easy Pieces 1 by Andrea Arpaci-Dusseau and Remzi Arpaci-Dusseau.

This book covers three fundamental aspects of Operating Systems. Those aspects being Virtualization, Concurrency, and Persistence.

Each of these pieces encapsulate a wide array of complex systems. These systems are usefully decoupled into many parts. Two of those parts being Mechanisms and Policies.

You can think of the mechanism as providing the answer to a how question about a system; for example, how does an operating system perform a context switch? The policy provides the answer to a which question; for example, which process should the operating system run right now? (Arpaci-Dusseau & Arpaci-Dusseau, 2018)

The distinction between the two pieces offers a nice abstraction for those developing and using an Operating System. Mechanisms are algorithms that allow the OS to function effectively with low level components. Policies are the guidelines that our OS uses to make high level decisions relating to those systems.

Mechanisms and Policies also help us evaluate the quality of an Operating System.

How do the mechanisms in one Operating System compare to the next? What kind of trade-offs occur when you select one policy over another?

The idea of Mechanisms and Policies is highly adaptable. The purpose of this blog is to provide a small overview on how it’s used in the context of Operating Systems.

Mechanism Example

Assuming we have a single core CPU, how does the OS manage control of this essential resource? We can allow each process access to the CPU through a method known as Limited Direct Execution.

Limited Direct Execution allows each process it’s own time slot on the CPU. This comes with great risks that become alleviated via tactics mentioned in the book. 1 We will not be covering those in this blog. Let’s assume we let process 1 run directly on the CPU and a new job, process 2, enters the queue. How do we change from process 1 to process 2?

The OS must regain power from the running process via an interrupt. Then the OS will perform a context switch to get to process 2.

A context switch is a mechanism.

A context switch is conceptually simple: all the OS has to do is save a few register values for the currently-executing process (onto its kernel stack, for example) and restore a few for the soon-to-be-executing process (from its kernel stack). (Arpaci-Dusseau & Arpaci-Dusseau, 2018)

A high level procedure for a context switch from process 1 to process 2 would look something like this, 1

1.) Interrupt occurs from hardware or system call.

2.) Save user registers for P1 to P1’s kernel stack.

3.) Switch from user mode to kernel mode.

4.) jump to the trap handler

5.) Execute trap handler logic defined by the OS.

6.) Save kernel registers for P1 to P1’s Process Control Block (PCB).

7.) Restore P2’s registers from P2’s Process Control Block.

8.) Switch to P2’s Kernel Stack.

9.) Return from trap into P2.

10.) Restore P2’s registers from the Kernel Stack.

11.) Switch to User Mode.

12.) Jump to Process 2.

Note that this procedure can vary among different architectures.

Most importantly, we have a defined procedure for switching from one process to another. How the switch is made from process 1 to process 2 is separated from why it’s made. The latter being the policy and the former being the mechanism.

Policy Example

We’ve now discussed how the CPU can switch from one process to another. Let’s proceed to discuss the decision making behind selecting which job to run.

How do you manage many jobs utilizing the CPU with great response and turnaround time? This situation is like a loaded restaurant on a Saturday night with only one server. Do you want to get customers in and out as quickly as possible (turnaround time), or do you want to minimize your response time to each customer?

We need high level policies to dictate which job should get to use the CPU at any given time. These are known as scheduling policies.

A very simple scheduling policy is known as First In, First Out (FIFO). This is a non-preemptive 2 scheduling approach that prioritizes incoming jobs on a first to show basis.

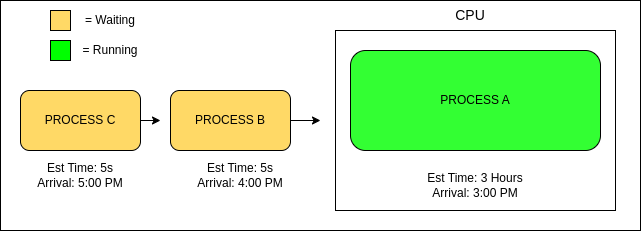

Here’s a simple illustration,

This shows 3 jobs in the OS Scheduler. According to FIFO, process A showed up at 3:00 p.m. and will rightfully get it’s spot on the CPU at that time. We can imagine that process A is a CPU intensive batch job and doesn’t leave the CPU for 3 hours. When process C and process B show up at 5:00 p.m. and 4:00 pm respectively, they are both starved until process A releases the CPU.

FIFO is one possible answer to the question, which job should run on the CPU at any given moment? FIFO says, it should be the one that showed up first.

We can see why this may not be a great option (potential for high turnaround and response time), but luckily we have a choice here. Researchers have answered this question in a variety of ways: FIFO, SJF, STCF, CFS, BFS, and many more.

As you can see the scheduling policy asks the engineer to make a decision, a decision that often incurs sacrifices (e.g. quicker response time for longer turnaround time).

References

-

Arpaci-Dusseau, R. H., & Arpaci-Dusseau, A. C. (2018). Operating systems: Three easy pieces. Arpaci-Dusseau Books. ↩ ↩2 ↩3

-

Meaning that if we have a massive job (process A) on the CPU and a smaller job (process B) show up in the queue, process B will have to wait patiently while process A finishes (completes or switches to a non-running state). ↩

Enjoy Reading This Article?

Here are some more articles you might like to read next: